Gathering data

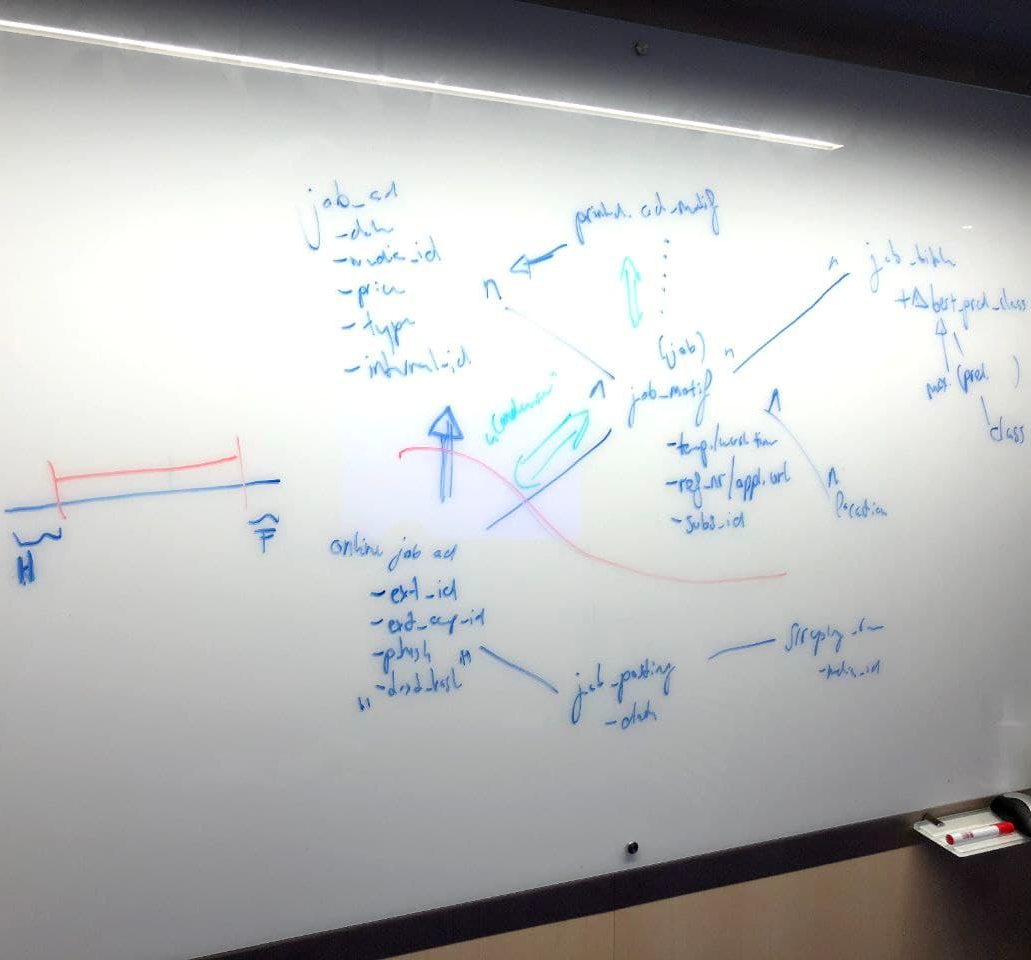

Internal data value. Business processes constantly generate data that lies unused, scattered across many different systems. Transferring this data into a usable infrastructure is essential. Our experience helps find the right trade-off between financial viability and data volume.

External sources. The use of external data is often neglected because the effort of evaluation and matching seems high. We are experts in web scraping and oversee the collection of Media Summary's daily, nationwide ad data. Our scraping results are enriched using AI and matched just-in-time with the Media Summary database.

Analyzing data

Data Analysis. Our interdisciplinary team of data scientists analyzes problems in terms of potential machine learning solutions. To find the optimal approach, we invest considerable time in tracking the current state of research. This allows us to constantly add innovative tools to our infrastructure and offer flexible solution strategies.

Training and Evaluation. The development of AI systems has parallels to competitive sports: without hard work, potential remains untapped. We love competition, and our team members spur each other on to top performance. We place particular emphasis on practical solutions, which is why we evaluate our models extremely critically.

Utilizing insights

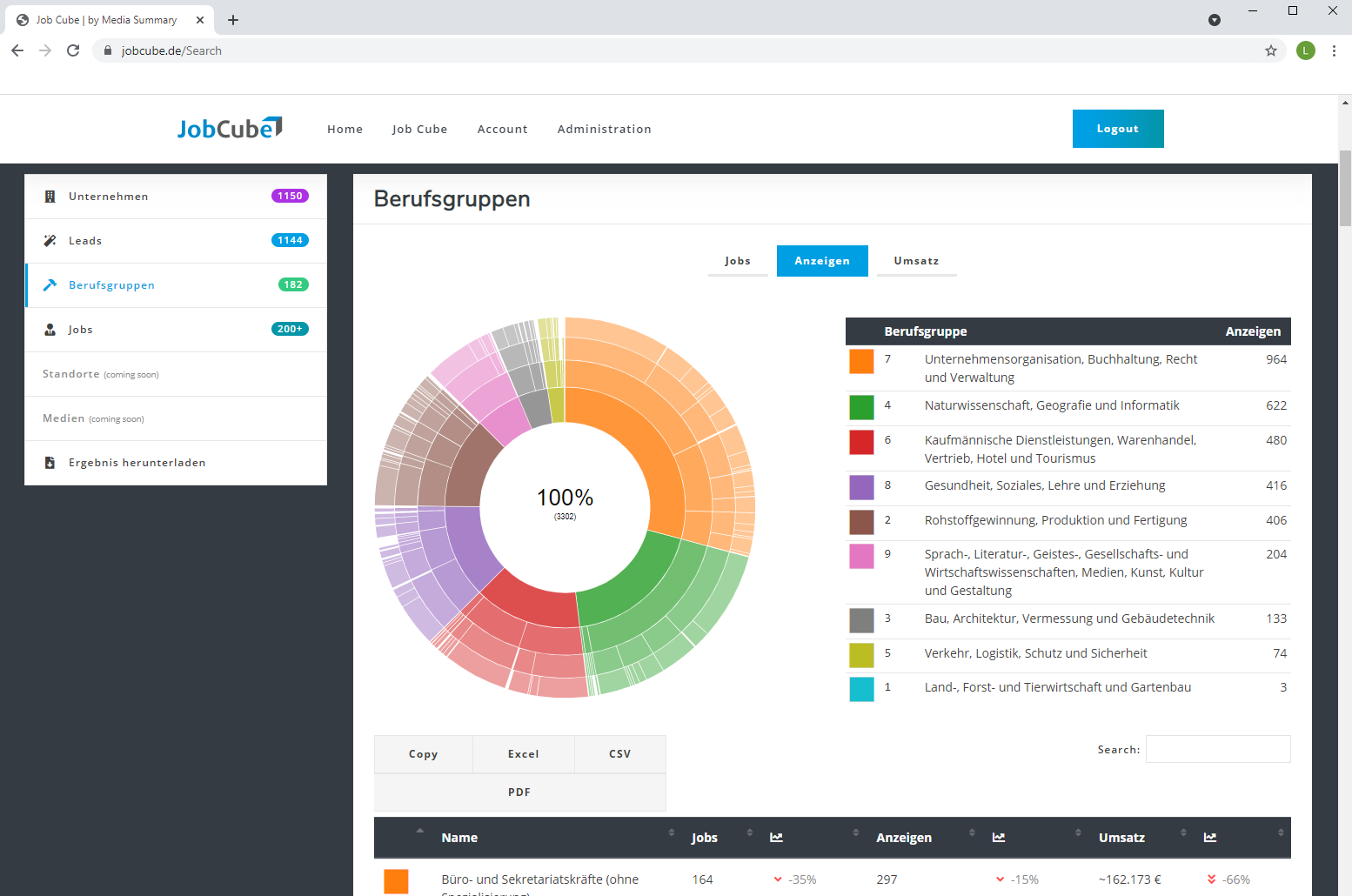

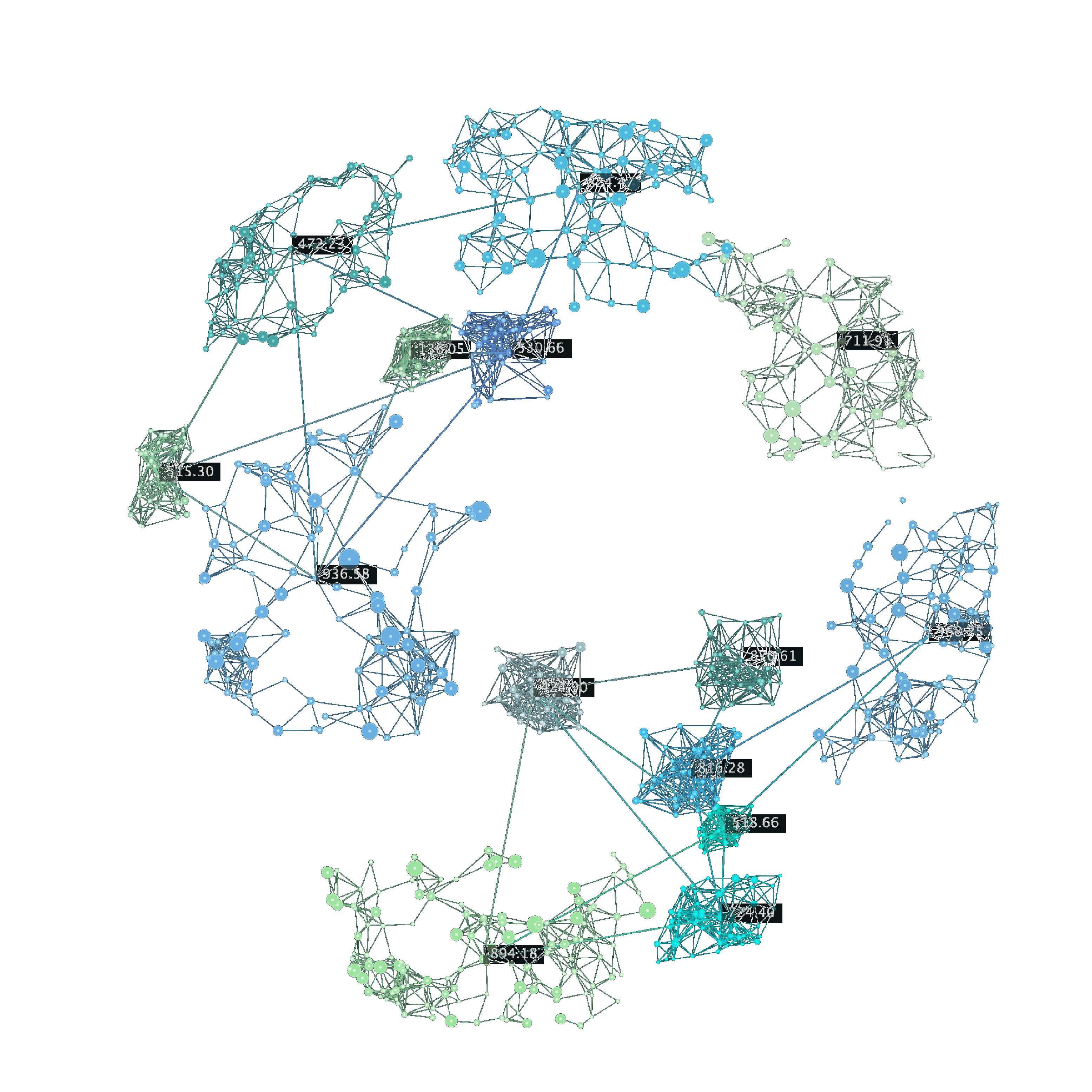

Simple visualization. Digitalization makes data spaces and interrelationships appear more and more complex. We know how to visualize them in a comprehensible way. For this purpose, we develop modern, intuitive web applications. One of our data journeys leads to the Job Cube, which provides insight into the job market.

Seamless integration. AI systems can completely replace manual effort. Often, however, they also support decision-making. Our goal is therefore always seamless integration into business processes. This increases both acceptance and value creation and determines the success of the data journey.